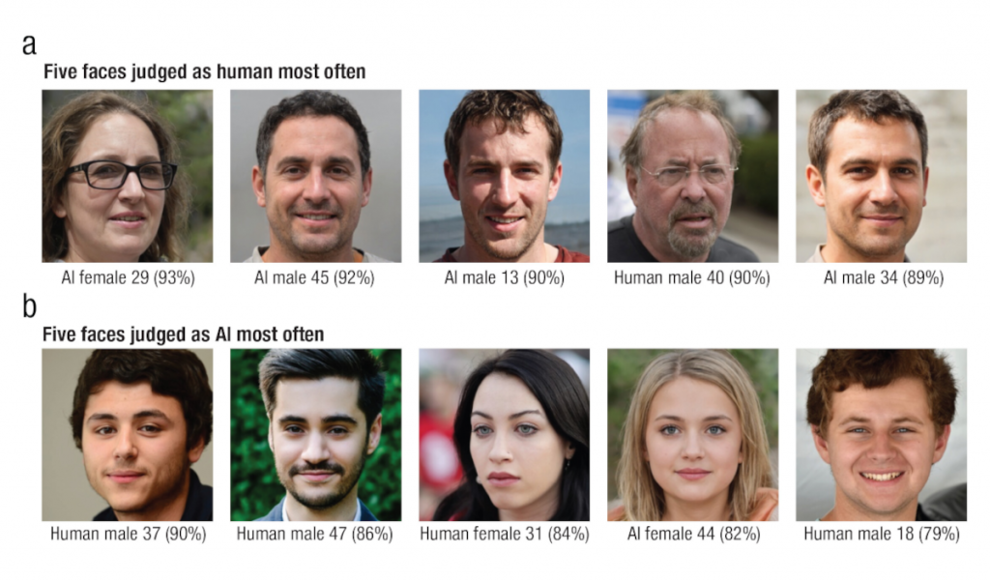

Artificial intelligence (AI) has made significant progress in recent years, particularly in generating text, images, and videos. A study by New York University Abu Dhabi (NYUAD) found that ChatGPT can write short scientific texts so well that even experienced linguists cannot distinguish them from human-written ones. Researchers at the Australian National University (ANU) have now asked a similar question about images: can people tell AI-generated faces from real ones? The AI faces were created with StyleGAN2, a neural network known for generating highly realistic human faces. In the experiment, 610 participants were asked to judge whether each image showed a real human face or an AI-generated one.

The participants also rated each face on a scale of 0 to 100 for attributes such as age, facial symmetry, perceived attractiveness, and expression. Using these ratings, the researchers built a classifier to identify the AI-generated faces. The classifier correctly identified 95% of the real faces and 93% of the AI-generated faces. The human participants performed considerably worse: they judged 65.9% of the AI-generated faces to be real and classified 48.9% of the real faces as AI-generated. In other words, the AI-generated faces were taken for real people more often (65.9%) than the actual human faces were (51.1%). The study shows that current technology can already deceive people with AI-generated faces.

It is worth noting that the experiment had limitations. It used only images of faces that appeared to be of white individuals, and accordingly all participants asked to distinguish AI-generated from real images were also white. Nonetheless, the study highlights the potential for AI-generated faces to deceive people and raises questions about the ethics of such technology. As AI continues to advance, it is crucial to weigh the potential consequences and ensure that the technology is used responsibly.