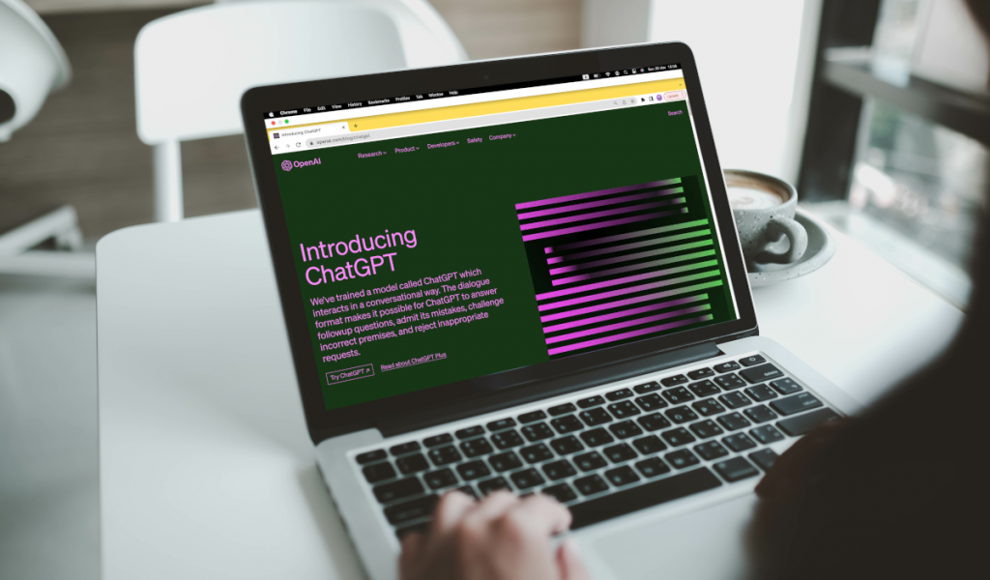

The artificial intelligence (AI) chatbot ChatGPT has been shown to outperform humans in many areas. However, one researcher is convinced that this large language model (LLM) is not truly "intelligent" because of certain limitations. In recent months, studies have shown that ChatGPT can outperform students in exams and write scientific texts that linguists cannot distinguish from human writing. As a result, many assume that AI is already as intelligent as humans and could revolutionize society. Anthony Chemero, a professor of philosophy and psychology at the University of Cincinnati (UC), has now published a paper arguing that many people misunderstand AI. According to him, an AI like ChatGPT cannot be intelligent in the same way humans are, even though it is, in a sense, intelligent and can even lie.

According to the paper, published in the journal Nature Human Behaviour, ChatGPT is merely an LLM trained on large amounts of data from the internet. Its training data therefore carries many human biases, which the model absorbs unchecked. LLMs generate impressive text but often invent things out of thin air. They learn to produce grammatically correct sentences, yet they need far more training than humans to do so. They do not actually know what the things they say mean. LLMs also differ from human cognition because they are not embodied. Researchers call the misinformation AI produces, such as made-up facts, "hallucination." These errors occur because LLMs form sentences by repeatedly adding the statistically most likely next word. ChatGPT and similar systems do not check whether their sentences are true. Chemero therefore argues that "bullsh*tting" is a more fitting term than "hallucinating."
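The following deliberately tiny Python sketch illustrates the word-by-word mechanism described in the paragraph above. It is a toy, not how ChatGPT is actually built. It assumes a simple bigram model (counting which word follows which) trained on a made-up four-sentence corpus, and the corpus and the `generate` function are hypothetical. Real LLMs use large neural networks over sub-word tokens and often sample rather than always taking the top choice. The sketch does show the key point: the program picks whichever continuation was most frequent in its training data and never checks whether the result is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real LLMs are trained on vast amounts of internet text.
corpus = [
    "the moon is made of rock",
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the sun is hot",
]

# Count which word follows which (a bigram table), with start/end markers.
next_counts = defaultdict(Counter)
for sentence in corpus:
    words = ["<s>"] + sentence.split() + ["</s>"]
    for prev, nxt in zip(words, words[1:]):
        next_counts[prev][nxt] += 1

def generate(max_words=10):
    """Greedily append the most frequent next word; truth is never checked."""
    word, output = "<s>", []
    for _ in range(max_words):
        if not next_counts[word]:
            break
        word = next_counts[word].most_common(1)[0][0]  # most likely next word
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

print(generate())
```

In this corpus, "cheese" follows "of" more often than "rock" does, so the sketch prints "the moon is made of cheese". That sentence is fluent and statistically likely, but false.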

The researcher further argues that LLMs are not intelligent in the same way as humans because humans are embodied. In his view, real intelligence is possible only in living beings, because they are always surrounded and shaped by other people and by their material and cultural environments. His core message is that LLMs are not intelligent like humans because they do not give a damn about their results. Humans, by contrast, do care. Things matter to us. We are committed to our survival. We care about the world we live in.